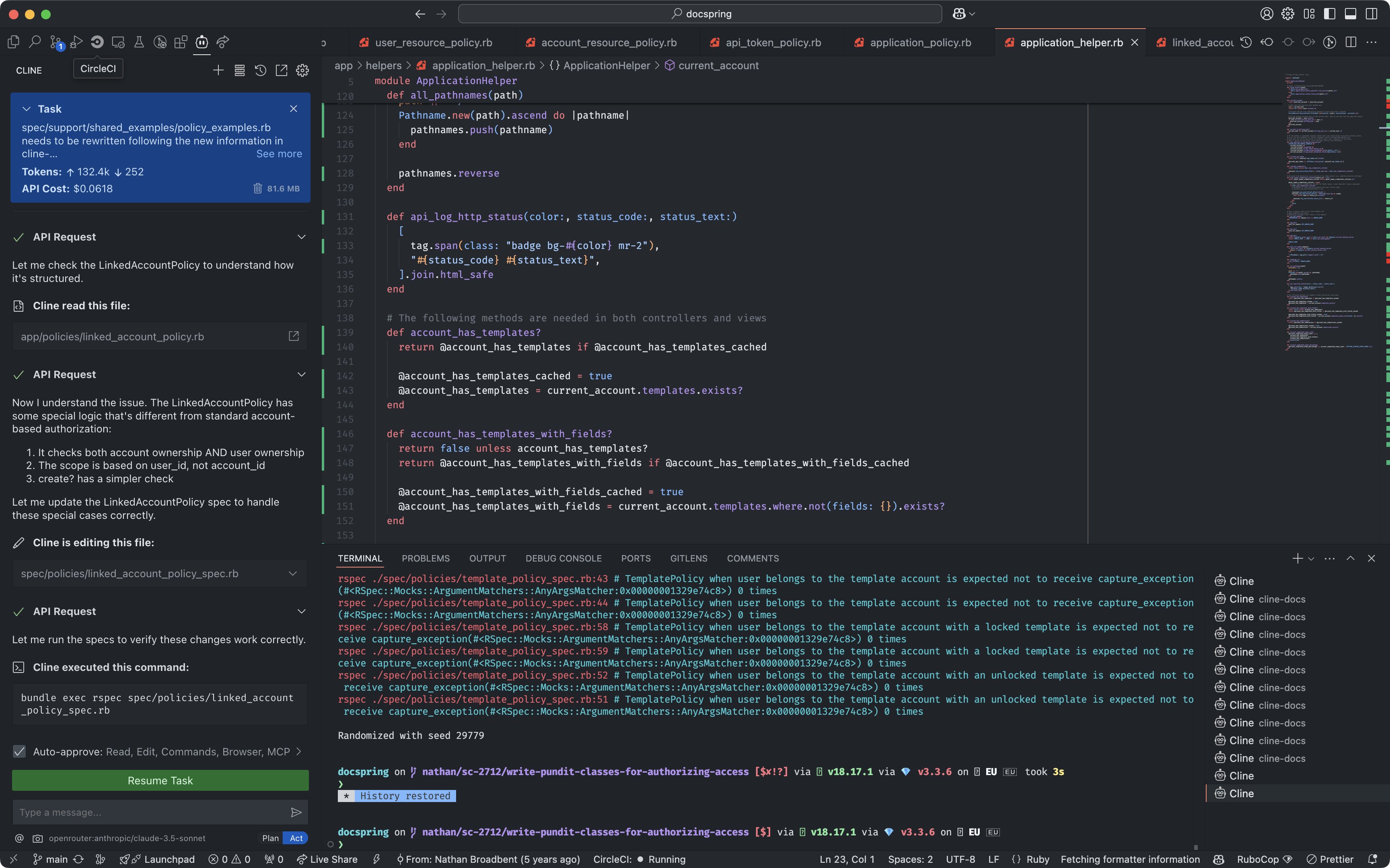

I've been experimenting with AI pair programming over the past week using the Cline VS Code extension.

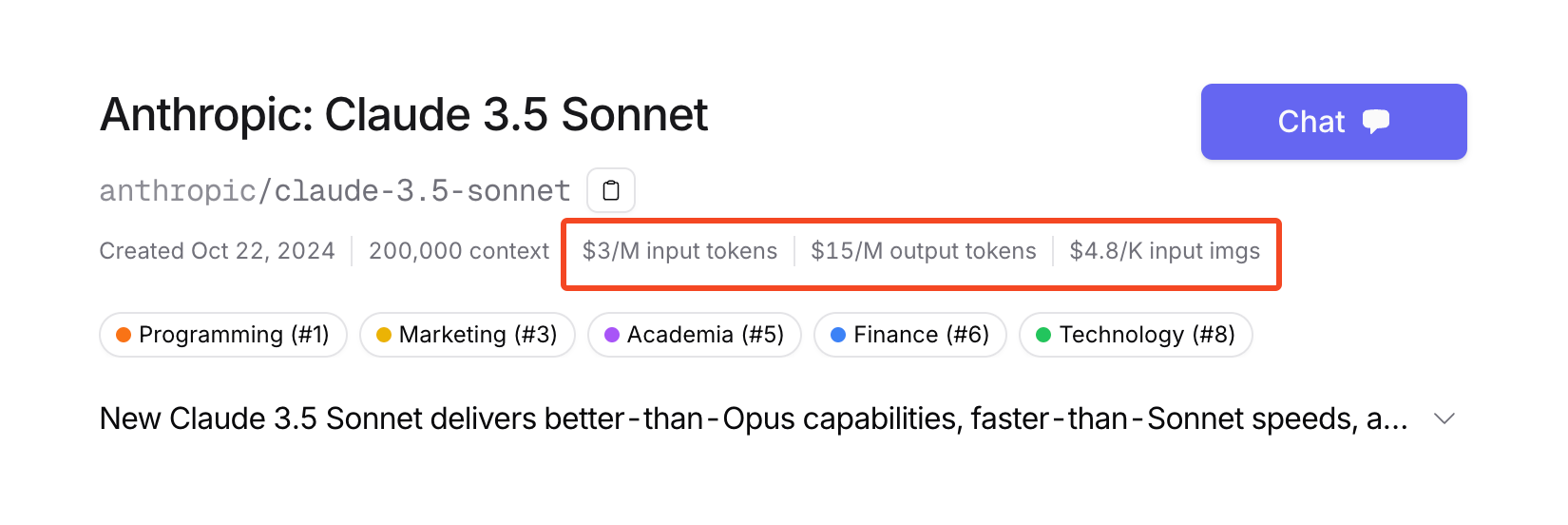

The main AI model I've been using is Claude 3.5 Sonnet from Anthropic. I call the Claude API via OpenRouter, which is a proxy service that makes it easy to switch between various models and providers. Another benefit is that OpenRouter doesn't rate-limit API requests (as long as you keep buying credits), so you can use it all day without any disruptions.
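With OpenRouter, switching models mostly means changing a model slug in an OpenAI-style chat request. Here's a hedged sketch of such a request. The endpoint URL and model slugs are my assumptions about OpenRouter's API, so check their docs before relying on them. The helper name `build_chat_request` is hypothetical, and it builds the request without sending it.

```ruby
require "json"
require "net/http"
require "uri"

# Assumed OpenRouter endpoint (OpenAI-compatible chat completions).
OPENROUTER_URL = URI("https://openrouter.ai/api/v1/chat/completions")

# Builds (but does not send) a chat request. Switching providers is just a
# different model slug, e.g. "anthropic/claude-3.5-sonnet" (assumed slug).
def build_chat_request(model:, prompt:, api_key:)
  request = Net::HTTP::Post.new(OPENROUTER_URL)
  request["Authorization"] = "Bearer #{api_key}"
  request["Content-Type"] = "application/json"
  request.body = JSON.generate({ model: model, messages: [{ role: "user", content: prompt }] })
  request
end
```

To actually send it, you'd pass the request to `Net::HTTP.start(OPENROUTER_URL.host, OPENROUTER_URL.port, use_ssl: true) { |http| http.request(req) }`.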

I've spent around $280 on OpenRouter credits over the last 5 days, or a little over $50 per day. Here's what I've learned so far:

Claude 3.5 Sonnet Is Pretty Impressive

Claude 3.5 Sonnet can do some amazing things. It's excellent at writing boilerplate code, unit tests, and even some pretty complicated algorithms. However, working with Claude sometimes feels like pair programming with a junior developer who needs constant supervision. It struggles with our large, complex codebase, and it hasn't been able to stay on track or finish any complex task without a lot of help.

The good news: My job is safe. (For now. Probably not for long.)

Here are some of the tasks we worked on together.

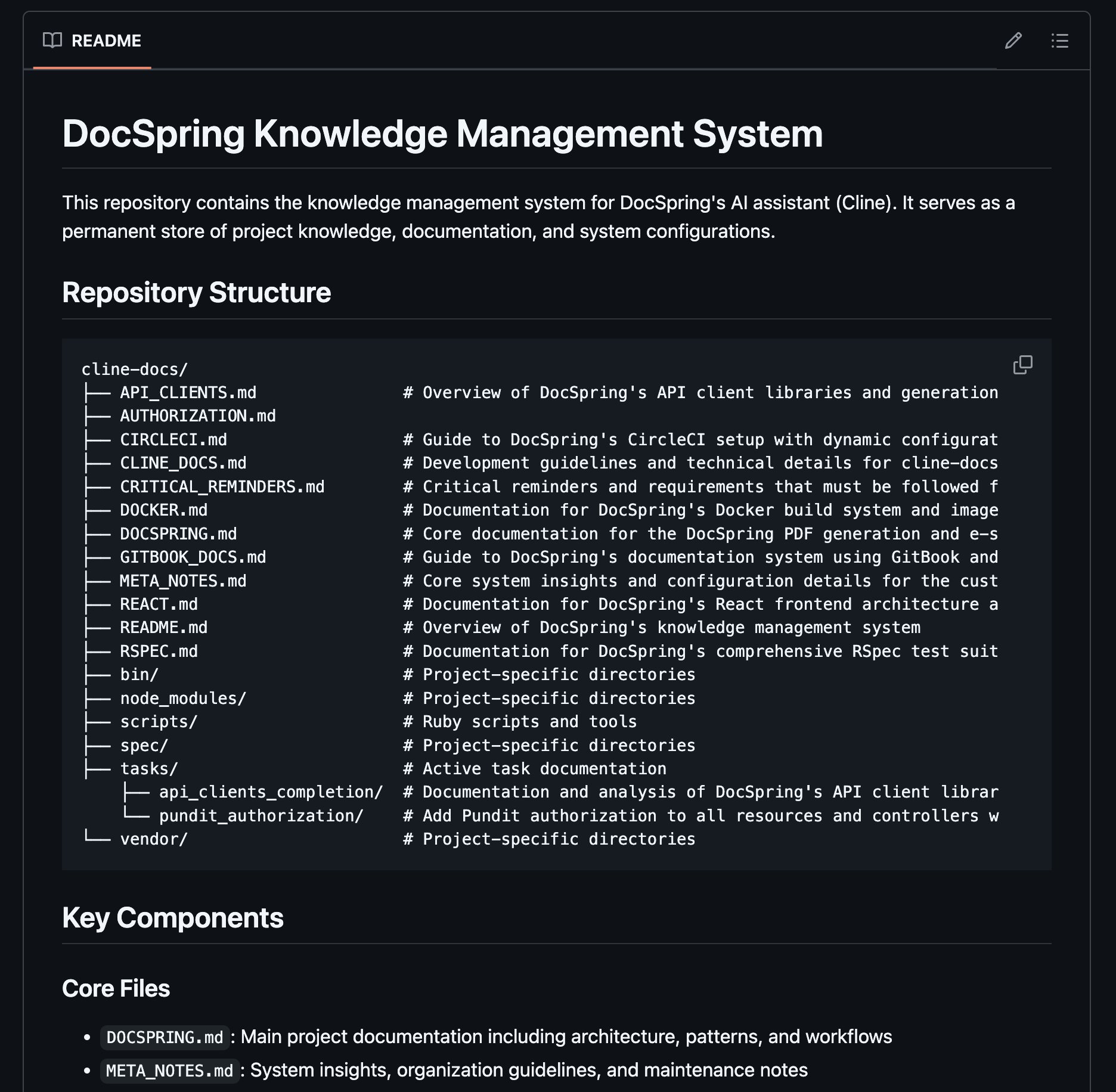

Task 1: Self-Maintained Documentation

One area where the AI shone was writing documentation. One of the first tasks we worked on was creating a cline-docs git repository where it could store documentation, notes, and memories.

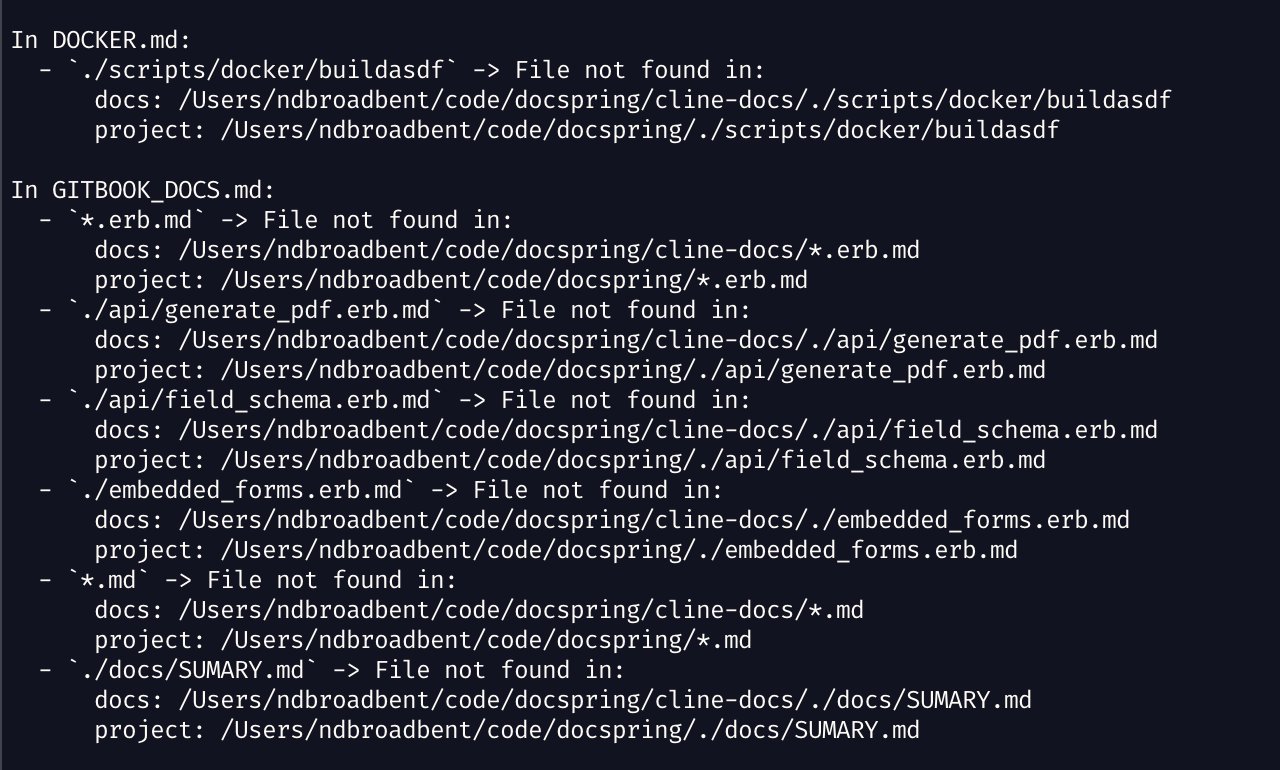

We wrote a script that generates its own "custom instructions" prompt, including dynamically generated indexes of important files. We also added a linting pass that scans the docs for links to missing files, which helps keep the documentation accurate and up to date.
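Here's a rough idea of what a broken-link check like that can look like. It's a minimal sketch, not our actual script: `find_broken_links` is a hypothetical name, and it uses a simple regex for inline Markdown links rather than a full Markdown parser.

```ruby
# Matches inline Markdown links and images: [text](target) / ![alt](target).
# Simplified: a regex, not a full Markdown parser.
LINK_PATTERN = /\[[^\]]*\]\(([^)\s]+)\)/

# Returns [markdown_file, link_target] pairs for local links whose target is missing.
def find_broken_links(docs_dir)
  broken = []
  Dir.glob(File.join(docs_dir, "**", "*.md")).sort.each do |md_file|
    File.read(md_file).scan(LINK_PATTERN).flatten.each do |target|
      # Skip external URLs (https:, mailto:, ...) and in-page anchors.
      next if target.match?(/\A[a-z][a-z0-9+.-]*:/i) || target.start_with?("#")

      # Drop any #fragment and resolve relative to the linking file's directory.
      path = target.split("#", 2).first
      resolved = File.expand_path(path, File.dirname(md_file))
      broken << [md_file, target] unless File.exist?(resolved)
    end
  end
  broken
end
```

Running this over cline-docs and failing the lint step whenever the result isn't empty would catch links to renamed or deleted files.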

Claude produced lots of great documentation that will be very useful for other developers in the future (both humans and AI).

Task 2: Automated Package Updates

I wanted to automate some Linux package updates, similar to what Dependabot does. This task took about 6 hours to complete with Cline / Claude.

The goal was to set up a scheduled job that runs a few times a day, checks for new package versions, and opens a new pull request if necessary. If there is an existing PR for an older version, it should close that one and supersede it with the newer version.
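The PR bookkeeping above boils down to a small decision function. This is a hedged sketch: `UpdatePR` and `plan_update` are hypothetical names, and it assumes plain dotted version numbers that `Gem::Version` can compare. Real Debian version strings (epochs, `+deb12u1` suffixes, etc.) would need `dpkg --compare-versions` instead.

```ruby
require "rubygems"

# Hypothetical record for an open update PR (not a real GitHub API object).
UpdatePR = Struct.new(:number, :version)

# Decides whether to open a PR for the latest version and which older PRs to close.
# Assumes plain dotted versions that Gem::Version can compare.
def plan_update(current_version:, latest_version:, open_prs:)
  latest = Gem::Version.new(latest_version)
  up_to_date = latest <= Gem::Version.new(current_version)

  # PRs for older versions have been superseded by the latest one.
  stale = open_prs.select { |pr| Gem::Version.new(pr.version) < latest }
  has_latest_pr = open_prs.any? { |pr| Gem::Version.new(pr.version) == latest }

  {
    open_pr_for: (up_to_date || has_latest_pr) ? nil : latest_version,
    close: stale.map(&:number)
  }
end
```

A scheduled CI job could call this a few times a day and then carry out the plan through the GitHub API or the `gh` CLI.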

Claude spent a lot of time stuck on 404 errors from the Debian snapshot repository. It couldn't work out the correct URLs or how to update our Dockerfiles, and it spent a long time adding debug logging and testing curl requests. It also struggled to understand our CI setup and made numerous errors in the CircleCI config syntax. But we got it done!
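For reference, snapshot.debian.org addresses each archive snapshot by a UTC timestamp in the URL. Below is a minimal sketch of building those URLs and a matching APT source line. The helper names are hypothetical, and this isn't the script we ended up with.

```ruby
# snapshot.debian.org URLs embed a UTC timestamp formatted as YYYYMMDDTHHMMSSZ.
# getutc returns a copy, so the caller's Time object is not modified.
def snapshot_base_url(time, archive: "debian")
  stamp = time.getutc.strftime("%Y%m%dT%H%M%SZ")
  "https://snapshot.debian.org/archive/#{archive}/#{stamp}/"
end

# Builds an APT source line pinned to that snapshot. check-valid-until=no stops
# apt from rejecting old Release files whose Valid-Until date has passed.
def snapshot_sources_line(time, suite:, components: %w[main])
  "deb [check-valid-until=no] #{snapshot_base_url(time)} #{suite} #{components.join(' ')}"
end

snapshot_sources_line(Time.utc(2024, 1, 15, 6, 30, 0), suite: "bookworm")
# => "deb [check-valid-until=no] https://snapshot.debian.org/archive/debian/20240115T063000Z/ bookworm main"
```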

This task ended up costing about $50 in OpenRouter credits. In hindsight, I might have finished it faster by myself (with GitHub Copilot).

Task 3: The Pundit Refactoring Nightmare

I decided to test Claude's capabilities by asking it to refactor our authorization logic. We were already using Pundit for a few models, but the rest of our authorization logic was scattered around various controllers and models. I thought this would be a straightforward task for an AI. I couldn't have been more wrong.

I was hoping it would look at our controllers and models, figure out how we currently authorize users, and move all of that logic into individual policy classes. Then it would write tests for the policies and fix any existing tests that broke.
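For anyone unfamiliar with Pundit: a policy is a plain Ruby class that receives the user and the record, with one predicate method per action. Here's a minimal sketch of the target shape, where `User`, `Post`, and the ownership rule are hypothetical stand-ins for our real models and logic.

```ruby
# Hypothetical models standing in for ActiveRecord classes.
User = Struct.new(:id, :admin, keyword_init: true) do
  def admin?
    admin == true
  end
end

Post = Struct.new(:user, :published, keyword_init: true)

# A Pundit-style policy: a plain Ruby class initialized with (user, record).
class PostPolicy
  attr_reader :user, :record

  def initialize(user, record)
    @user = user
    @record = record
  end

  # Hypothetical rule that might previously have lived in a controller:
  # admins can always update; owners can update unpublished posts.
  def update?
    return false if user.nil?

    user.admin? || (record.user == user && !record.published)
  end

  # Common convention: edit? mirrors update?.
  alias_method :edit?, :update?
end
```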

We spent the whole day on this task. The initial commits added or changed 104 files and added 3,618 lines of code. Unfortunately, Claude left me with a huge mess to clean up. It constantly made bad assumptions and misunderstood critical details. It produced broken code and hallucinated methods and user roles that didn't exist. Every policy class had to be verified and fixed by hand, because I couldn't trust anything it wrote.

Well, at least it's a start.

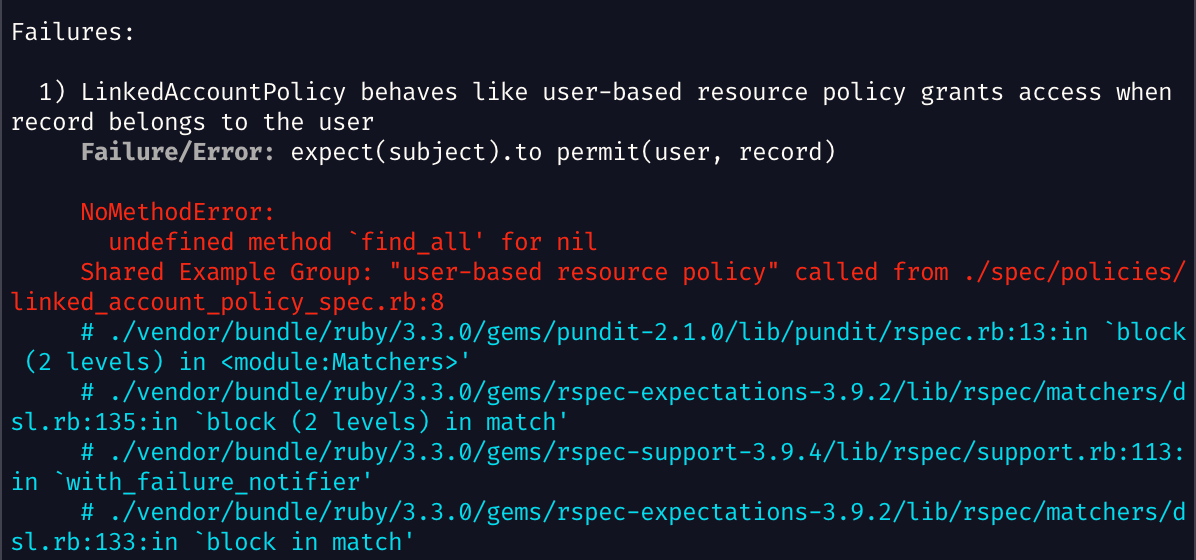

We ran into a test failure that stumped every AI I tried, including Claude 3.5 Sonnet, DeepSeek-R1, and OpenAI o1-preview.

The test was crashing with this error:

```
NoMethodError: undefined method `find_all' for nil
```

An AI would start by reading the `policy_examples.rb` file and looking at the test case:

```ruby
RSpec.shared_examples 'user-based resource policy' do
  subject { described_class }

  include_context 'with policy setup'

  let(:record) { create(record_name, user: user) }

  it 'denies access when user is not authenticated' do
    expect(subject).to_not permit(nil, record)
  end
end
```

Then they would read the spec file and see this line:

```ruby
it_behaves_like 'user-based resource policy'
```

And that's all the information they had to go on.

It's not clear at all where the error is coming from. find_all doesn't even appear in any of these files. Where do you go from here?

The AIs tried all sorts of things. They made random changes to files, moved things around, and rewrote the test. Nothing fixed it; they just went round and round in circles.

I was surprised that none of them even tried to search the codebase for find_all. And none of them tried running rspec with the --backtrace flag. Maybe they weren't aware that RSpec only shows you lines from your codebase by default.
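Searching for `find_all` would have been a good first step, as long as the search includes installed gems (ours live under `vendor/bundle`). Here's a minimal sketch; `search_ruby_sources` is a hypothetical helper, and `grep -rn` would do the same job from the shell.

```ruby
# Returns "path:line: text" for every line in a .rb file under roots that matches pattern.
def search_ruby_sources(pattern, roots)
  roots.flat_map do |root|
    Dir.glob(File.join(root, "**", "*.rb")).sort.flat_map do |path|
      File.foreach(path).with_index(1).filter_map do |line, number|
        "#{path}:#{number}: #{line.strip}" if line.match?(pattern)
      end
    end
  end
end
```

For example, `puts search_ruby_sources(/\bfind_all\b/, ["app", "spec", "vendor/bundle"])` would list every call site, including the ones inside gems.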

Here's what happens when you run `rspec` with the `--backtrace` flag. The full backtrace shows that the error is coming from the pundit gem itself:

```
./vendor/bundle/ruby/3.3.0/gems/pundit-2.1.0/lib/pundit/rspec.rb:13:in `block (2 levels) in <module:Matchers>'
```

Here are the relevant lines in that file:

```ruby
module Pundit
  module RSpec
    module Matchers
      extend ::RSpec::Matchers::DSL

      matcher :permit do |user, record|
        match_proc = lambda do |policy|
          @violating_permissions = permissions.find_all do |permission|
            !policy.new(user, record).public_send(permission)
          end

          @violating_permissions.empty?
        end

        # ... (rest of the matcher omitted)
      end
    end
  end
end
```

Great, we found the call to `find_all`, so `permissions` must be `nil`. A quick look at the Pundit documentation shows that we're supposed to wrap the `permit` call inside a `permissions` block, like this:

```ruby
describe PostPolicy do
  subject { described_class }

  permissions :update?, :edit? do
    it "denies access if post is published" do
      expect(subject).not_to permit(User.new(admin: false), Post.new(published: true))
    end
  end
end
```

That's why it was crashing with ``undefined method `find_all' for nil``. We never defined any permissions.
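To see the failure mode in isolation, here's a plain-Ruby sketch (no RSpec or Pundit needed) of the matcher's core check. Calling `find_all` on a `nil` list of permissions raises the same `NoMethodError`, while a guard clause can turn it into an actionable message. The method names and error wording are hypothetical; this is not Pundit's actual code or fix.

```ruby
# Hypothetical policy that denies every action.
class DenyAllPolicy
  def update?
    false
  end
end

# Mirrors the matcher's core check: which permissions does the policy deny?
def violating_permissions(permissions, policy)
  permissions.find_all { |permission| !policy.public_send(permission) }
end

# Same check, but fails with a clearer message when no permissions were given.
def violating_permissions_with_guard(permissions, policy)
  if permissions.nil? || permissions.empty?
    raise ArgumentError, "No permissions to check. Wrap `permit` in a `permissions :update?` block."
  end

  violating_permissions(permissions, policy)
end

violating_permissions([:update?], DenyAllPolicy.new) # => [:update?]
```

Calling `violating_permissions(nil, DenyAllPolicy.new)` reproduces the `NoMethodError`; the guarded version raises an `ArgumentError` that says what to do instead.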

To be fair, it's a terrible error message! I was going to open a PR to add a better error, but @Burgestrand beat me to it a few months ago.

Unfortunately, this change hasn't been released yet. Even if it had been, we were still on an older version of Pundit (2.1.0). We probably should have updated to the latest version before starting this task. That would have been a good thing for the AI to check!
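From the shell, `bundle outdated pundit` reports whether a gem is behind. Here's a hedged Ruby sketch of the same comparison an agent could run before touching a gem. `locked_version` is a hypothetical, simplified Gemfile.lock reader, and the latest version is passed in rather than fetched from RubyGems.

```ruby
require "rubygems"

# Hypothetical, simplified Gemfile.lock reader: top-level specs are indented
# exactly four spaces, e.g. "    pundit (2.1.0)". Their dependencies use six.
def locked_version(lockfile_text, gem_name)
  match = lockfile_text.match(/^ {4}#{Regexp.escape(gem_name)} \(([^)]+)\)$/)
  match && match[1]
end

# True when the locked version is older than the given latest version.
def outdated?(lockfile_text, gem_name, latest_version)
  current = locked_version(lockfile_text, gem_name)
  return false if current.nil?

  Gem::Version.new(current) < Gem::Version.new(latest_version)
end
```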

I'd love to see an AI agent that displays the following behavior:

- Checks if a Ruby gem needs an update before it starts working on a task related to that gem

- Debugs tricky error messages by knowing how to get more information, e.g. printing detailed stack traces, searching the codebase for keywords, Googling things in a browser, reading GitHub issues, etc.

- Realizes that an error message is confusing and should be fixed for everyone who uses the gem

- Suggests that we should fork the gem and open a PR to make the error message clearer

(I've added these tips to our custom instructions.)

Challenges and Limitations

The Cline extension allows you to configure some "Custom Instructions". The UI says: "These instructions are added to the end of the system prompt sent with every request."

However, Claude would never follow these steps, no matter where I put them:

- Read `./cline-docs/META_NOTES.md` in FULL

- Use the `read_file` tool to read this file

- Process its contents COMPLETELY

This was very frustrating. Claude did seem to be aware of other key points in the instructions, so it appeared to be ignoring these steps specifically.

DeepSeek-R1 also failed to follow the instructions, but OpenAI o1-preview did read the META_NOTES.md file every single time. I'm not sure why the other models behaved differently.

Claude also seemed to get overwhelmed by information easily and became hyperfocused on tasks. It would start to forget important directives from the prompt, as well as details from its own notes. For example, I asked it to regularly update its knowledge base in the cline-docs repo whenever it discovered important information about the codebase. It almost never did this unless I reminded it to make a note.

Claude would often write a test and then mark the task as completed. I had to keep reminding it to run the test first and make sure that it passed. (To be fair, I also used to do this when I was a junior developer.)

AI API Costs

I tried OpenAI's o1-preview model a few times. It was very good at following instructions and pretty good at programming tasks. I think it's a bit better than Claude. The only problem is that it's five times as expensive.

If Claude was costing me $50 per day, then o1-preview would cost $250 per day. That's over $5k a month if I used it regularly.
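Here's the back-of-the-envelope math as a tiny Ruby sketch. The 21 working days per month is my assumption, not a number from my actual usage.

```ruby
# Back-of-the-envelope monthly API cost.
# Assumption: about 21 working days per month.
def monthly_cost(daily_cost, price_multiplier: 1, working_days: 21)
  daily_cost * price_multiplier * working_days
end

monthly_cost(50)                      # => 1050 (Claude 3.5 Sonnet)
monthly_cost(50, price_multiplier: 5) # => 5250 (o1-preview at 5x the price)
```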

A Cure For Executive Dysfunction

I discovered that an AI agent can be very helpful if you struggle with ADHD. I manage mine pretty well, but sometimes I get stuck and find it very difficult to get started on a task, especially the boring ones. Now I can just type what I want the AI to do (or paste the ticket from Shortcut), and the AI gets the ball rolling for me. It might be a bit slow, and I might have to keep an eye on what it's doing, but getting things done slowly is better than not getting them done at all. I might start using AI agents for the initial steps of a task, and then take over once they get stuck or go in the wrong direction.

Conclusion

I'm going to keep playing with Cline and Claude 3.5 Sonnet. I've started to figure out what it struggles with, so I might stop asking it to do those sorts of things. It's still very good at writing tools, scripts, test cases, and documentation.

Might I get better results by fine-tuning the model on our codebase and documentation? I haven't looked into that yet, and I don't know where to start. Maybe there's also an easy way to get it to follow all of my custom instructions.

I look forward to the day that I can let an AI agent loose on our backlog of Shortcut stories and Sentry errors. Imagine going on vacation for a week and coming back to a completely empty todo list. Also your whole app has been rewritten in Rust.

I don't know what this all means for our economy or society. I hope it's good.

I expect that this blog post will be out of date by tomorrow morning.