For many years I’ve had this vision in my head: What if every API test, every SDK client, and every code example in our docs all came from the same source? No more drift, no more customers tripping over typos in examples. Just one unified workflow where a single source of truth generates everything.

I’ve been slowly chipping away at this goal while building DocSpring. This year, with the help of AI coding tools, I was finally able to cross the finish line.

Early Steps

We use the RSwag Ruby gem to write our API tests and generate an OpenAPI schema. We then use OpenAPI Generator to turn that schema into API client libraries.

Our auto-generated clients worked relatively well, but our API is asynchronous, and PDF generation can take anywhere from a few seconds to a few minutes. Ruby on Rails isn’t very good at concurrency, so we couldn’t keep long-lived HTTP connections open while a PDF was being generated. That left two options: webhooks and polling.

- Webhooks: Our server tells your server when the PDF is ready.

- Polling: Instead of one long HTTP request, you make a series of short HTTP requests in a loop until the PDF is ready.
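To make the polling option concrete, here is a minimal Ruby sketch of the loop that every customer would otherwise have to write. It assumes a hypothetical `check` callable that fetches the current status and returns a hash with a `:state` key; the `"processed"` and `"error"` state names are illustrative assumptions, not taken from the DocSpring API reference.

```ruby
# Raised when the PDF is still not ready after the timeout.
class PollTimeout < StandardError; end

# Minimal client-side polling loop. `check` is a hypothetical callable that
# fetches the current status and returns a hash such as
# { state: "pending" } or { state: "processed", download_url: "..." }.
# The state names are assumptions for illustration.
def poll_until_ready(check, interval: 1.0, timeout: 120.0, sleeper: method(:sleep))
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + timeout

  loop do
    result = check.call
    return result if result[:state] == "processed"
    raise "PDF generation failed: #{result.inspect}" if result[:state] == "error"

    if Process.clock_gettime(Process::CLOCK_MONOTONIC) >= deadline
      raise PollTimeout, "PDF was not ready after #{timeout} seconds"
    end

    sleeper.call(interval) # wait before the next short HTTP request
  end
end

# Usage with a fake status check that reports "processed" on the third call.
calls = 0
fake_check = lambda do
  calls += 1
  if calls < 3
    { state: "pending" }
  else
    { state: "processed", download_url: "https://example.com/out.pdf" }
  end
end

result = poll_until_ready(fake_check, interval: 0, sleeper: ->(_seconds) {})
puts result[:download_url] # prints https://example.com/out.pdf
```

Injecting `sleeper` keeps the loop testable without real delays; a production client would also add backoff and handle transient HTTP errors.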

Webhooks are still the best way to integrate with DocSpring, but it can take a lot of work to build a reliable webhook integration. You have to split your PDF generation workflow into asynchronous steps, track the state in your own database, handle retries and idempotency, and schedule code to run in the future that follows up in case you don't receive the webhook.
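Idempotency is one of the easiest parts of a webhook integration to get wrong, because a sender that retries may deliver the same event more than once. The sketch below records each event ID and ignores repeats. The payload field names (`id`, `submission`, `download_url`) are hypothetical, and a production version would store the IDs in a database table with a unique index rather than an in-memory `Set`.

```ruby
require "set"

# Hypothetical webhook receiver that tolerates duplicate deliveries.
# The payload field names ("id", "submission", "download_url") are
# assumptions for illustration, not DocSpring's documented webhook format.
class WebhookReceiver
  attr_reader :completed

  def initialize
    @seen_event_ids = Set.new # production: a DB table with a unique index
    @completed = {}           # submission ID => download URL
  end

  # Returns true if the event was processed, false if it was a duplicate.
  def handle(event)
    # Set#add? returns nil when the ID is already present.
    return false unless @seen_event_ids.add?(event.fetch("id"))

    submission = event.fetch("submission")
    @completed[submission.fetch("id")] = submission.fetch("download_url")
    true
  end
end

receiver = WebhookReceiver.new
event = {
  "id" => "evt_1",
  "submission" => { "id" => "sub_1", "download_url" => "https://example.com/a.pdf" }
}
first = receiver.handle(event)  # true: processed
second = receiver.handle(event) # false: the retried delivery is ignored
```

This only covers deduplication; the follow-up job for a webhook that never arrives would still need its own scheduling.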

I wanted to make the DocSpring API easy to use for the majority of our customers, and I didn't want to force every customer to build their own polling logic. So I built custom OpenAPI Generator extensions that would add polling logic directly into each one of our API client libraries.

This was a terrible idea. These extensions were an absolute nightmare to maintain. Every update to OpenAPI Generator could break a client library. Keeping it consistent across languages and in sync with our documentation was exhausting. We only had a few automated tests to ensure that our API clients were working, so a lot of bugs slipped through the cracks.

The First Breakthrough: Synchronous API Proxy

The turning point came when I finally decided to pull all that polling logic out of the client libraries and centralize it. Instead of building generator extensions or asking every customer to handle async polling themselves, I built a synchronous API proxy service in Go. Our proxy handles the waiting, retries, and responses automatically.

We launched our new synchronous API subdomains:

- US: `sync.api.docspring.com`

- EU: `sync.api-eu.docspring.com`

With these new subdomains, customers can finally make a single long-lived HTTP request and wait for the PDF to be ready. The response includes a `download_url`, so no extra polling code is required. We could finally delete all of our custom OpenAPI Generator extensions and release simple client libraries that use the synchronous API by default. We also added an optional `?wait=false` parameter for customers who still prefer asynchronous responses.
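From the customer’s side, the synchronous API reduces to one request with a generous read timeout. The following is a minimal sketch using only the Ruby standard library. The hostnames are the sync subdomains listed above, but the request path and body are placeholders (the real endpoints are in the API reference), and sending the token ID and secret via HTTP Basic auth is an assumption based on the `api_token_basic` security scheme in the RSwag test below.

```ruby
require "json"
require "net/http"
require "uri"

# Synchronous API hosts from the announcement above.
SYNC_HOSTS = {
  us: "sync.api.docspring.com",
  eu: "sync.api-eu.docspring.com"
}.freeze

# Builds a URI on the sync host. `path` is a placeholder: use the real
# endpoint from the API reference. wait: false appends ?wait=false.
def sync_uri(region, path, wait: true)
  uri = URI::HTTPS.build(host: SYNC_HOSTS.fetch(region), path: path)
  uri.query = URI.encode_www_form(wait: false) unless wait
  uri
end

# Sends one long-lived POST and returns the parsed JSON response.
# Net::HTTP's default read timeout is 60 seconds, so it is raised here
# to give slower PDFs time to finish.
def post_and_wait(uri, body, token_id:, token_secret:, read_timeout: 300)
  request = Net::HTTP::Post.new(uri, "Content-Type" => "application/json")
  request.basic_auth(token_id, token_secret) # assumed auth scheme
  request.body = JSON.generate(body)

  response = Net::HTTP.start(uri.host, uri.port, use_ssl: true, read_timeout: read_timeout) do |http|
    http.request(request)
  end
  raise "HTTP #{response.code}: #{response.body}" unless response.is_a?(Net::HTTPSuccess)

  JSON.parse(response.body)
end
```

The only difference between the two modes is the query string: `sync_uri(:us, "/placeholder")` waits for the PDF, while `sync_uri(:us, "/placeholder", wait: false)` returns immediately and leaves polling or webhooks to the caller.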

Going Even Further

I began writing more integration tests for our API clients. I wrote each test by hand in nine different programming languages and tried to cover our most important API endpoints. It was still exhausting and unmaintainable.

But then I remembered that we already have a set of RSwag tests that define our OpenAPI schema. Would it be possible to run those same tests for all of our API clients? What if I wrote a code generation system that hooks into RSwag and swaps out the test executor with a custom test harness for each language? Then every time an RSwag test runs, it could call our real API client methods with real parameters, print the result as JSON, and run all the same test assertions against that output.
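The core of that idea is a translation step: take an RSwag example’s operation ID and parameters and emit the equivalent client call in each language. The sketch below is a hypothetical and heavily simplified version. `render_client_call` and the naming conventions it encodes (snake_case methods and arguments for Ruby and Python, camelCase with a single request object for TypeScript) are assumptions about what generated clients typically look like, not DocSpring’s actual generator.

```ruby
# Hypothetical sketch: render one RSwag example (operation ID plus
# parameters) as a client call in a target language. The naming
# conventions below are assumptions, not a real generator's output.
def snake_case(name)
  name.gsub(/([a-z\d])([A-Z])/, '\1_\2').downcase
end

def camel_case(name)
  name.gsub(/_([a-z\d])/) { Regexp.last_match(1).upcase }
end

RENDERERS = {
  ruby: ->(op, args) {
    pairs = args.map { |k, v| "#{snake_case(k.to_s)}: #{v.inspect}" }
    "result = client.#{snake_case(op)}(#{pairs.join(', ')})"
  },
  python: ->(op, args) {
    pairs = args.map { |k, v| "#{snake_case(k.to_s)}=#{v.inspect}" }
    "result = client.#{snake_case(op)}(#{pairs.join(', ')})"
  },
  typescript: ->(op, args) {
    pairs = args.map { |k, v| "#{camel_case(k.to_s)}: #{v.inspect}" }
    "const result = await client.#{op}({ #{pairs.join(', ')} });"
  }
}.freeze

def render_client_call(language, operation_id, args)
  RENDERERS.fetch(language).call(operation_id, args)
end

puts render_client_call(:typescript, "listFolders", { parent_folder_id: "fld_123" })
# prints: const result = await client.listFolders({ parentFolderId: "fld_123" });
```

A full harness would wrap each rendered line with imports, client configuration, and a statement that prints `result` as JSON, so the output can be fed back into the original RSpec assertions.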

After a lot of hard work and a lot of help from AI agents, I finally got it all working. We now have a setup where a single RSwag test drives everything. Here's what one of our tests looks like:

```ruby
path '/folders/' do
  get 'Get a list of all folders' do
    tags 'Folders'
    operationId 'listFolders'
    description <<~DESC
      Returns a list of folders in your account. Can be filtered by parent folder ID to retrieve
      subfolders. Folders help organize templates and maintain a hierarchical structure.
    DESC
    security [api_token_basic: []]
    consumes 'application/json'
    produces 'application/json'
    parameter name: :parent_folder_id,
              in: :query,
              type: :string,
              required: false,
              description: 'Filter By Folder Id'

    response '200', 'filter templates by parent_folder_id', code_sample: true do
      serializer_list_schema FolderSerializer
      let(:parent_folder_id) { folder2.uid }

      run_test! do |folders|
        expect(folders.count).to eq 1
        expect(folders[0]['id']).to eq subfolder1.uid
        expect(folders[0]['name']).to eq subfolder1.name
      end
    end
  end
end
```

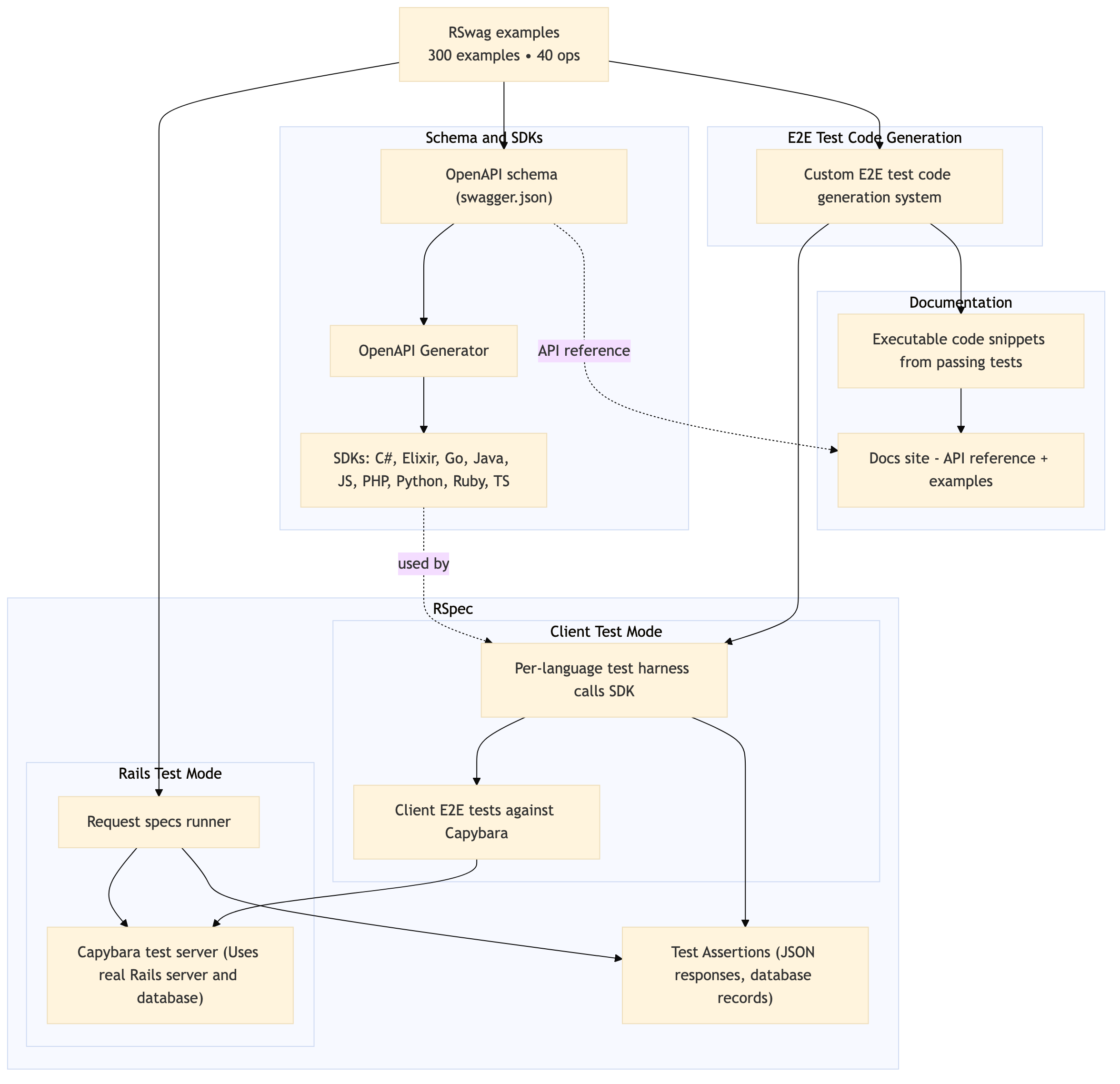

From this single test definition, we get:

- An RSwag test that defines our API behavior and expectations. We validate the JSON responses and make sure the correct records were created or updated in the database.

- Our OpenAPI schema

- Automatically generated client SDKs in nine programming languages

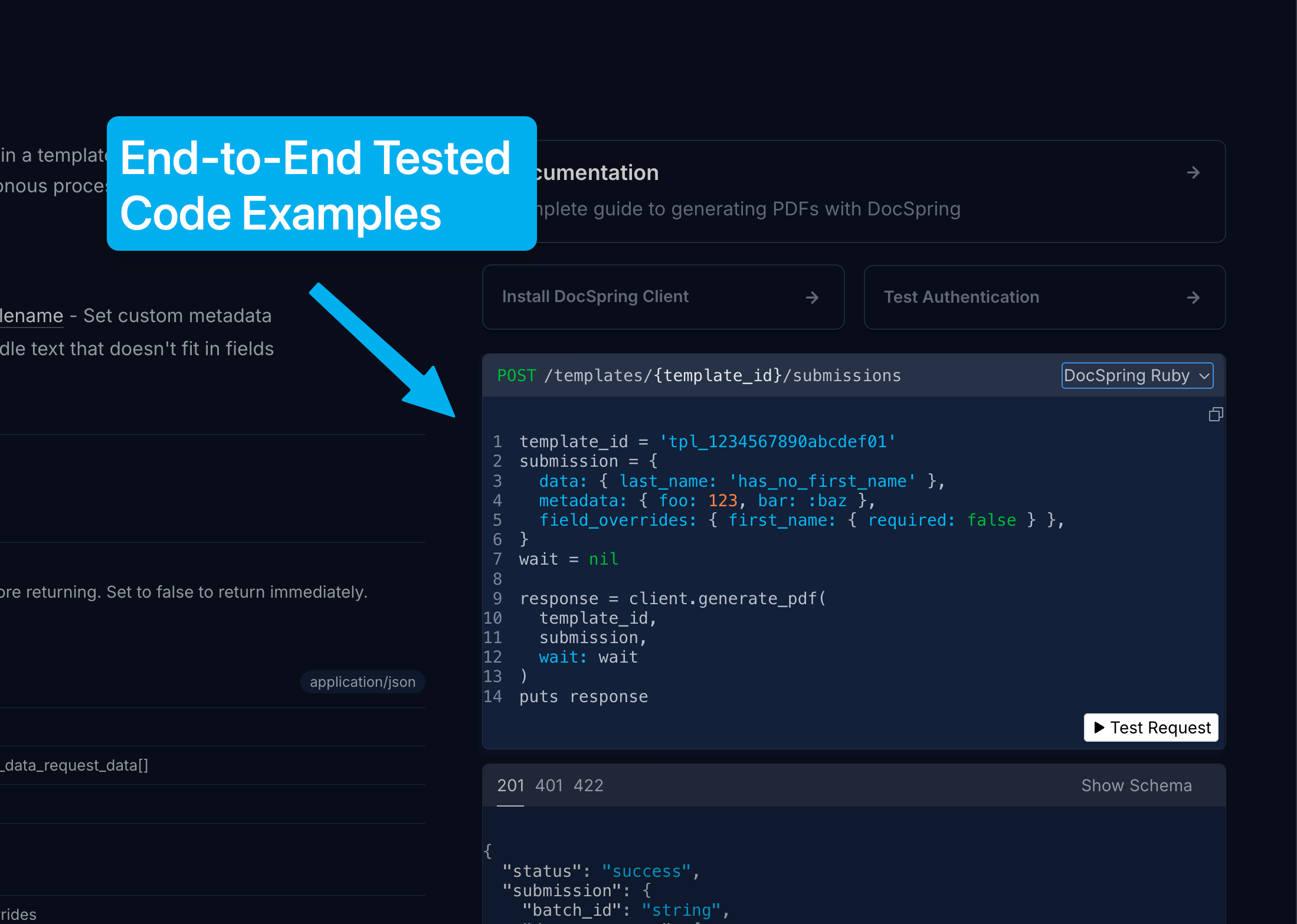

- End-to-end API client tests that run our real API client libraries with exactly the same expectations. We generate API client test code that hits a real Capybara test server and returns JSON responses.

- 100% tested code examples in our documentation. When you view code examples in our docs, those are the same lines of code that were used to run our end-to-end tests.
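The last step of each generated client test is to get the client’s output back into RSpec. One way to do that, sketched here with hypothetical names, is to run the generated script as a subprocess, require it to print the API response as JSON on stdout, and return the parsed value so the same `run_test!` expectations can run against it. The one-line `ruby -e` program below is only a stand-in for a generated Python, Go, or TypeScript test script.

```ruby
require "json"
require "open3"
require "rbconfig"

class ClientRunError < StandardError; end

# Hypothetical harness step: run a generated client script, require it to
# print the API response as JSON on stdout, and return the parsed value so
# the same RSpec expectations can be applied to it.
def run_generated_client(command)
  stdout, stderr, status = Open3.capture3(*command)
  raise ClientRunError, "#{command.join(' ')} failed:\n#{stderr}" unless status.success?

  JSON.parse(stdout)
end

# Stand-in for a generated test script: a one-line Ruby program that
# prints a fake API response as JSON.
fake_client = [
  RbConfig.ruby, "-rjson", "-e",
  'puts JSON.generate([{ "id" => "fld_2", "name" => "Subfolder" }])'
]

# `folders` now has the same shape the request spec's run_test! block receives.
folders = run_generated_client(fake_client)
```

Passing the command as an array avoids going through a shell, and surfacing stderr on failure makes a broken client library show up as a readable test failure rather than a JSON parse error.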

We have exactly 300 RSwag examples that test 40 different API operations. These tests run in two modes: once as Rails request specs, and then once through each of our nine API client libraries (C#, Elixir, Go, Java, JavaScript, PHP, Python, Ruby, TypeScript). That’s 2,700 end-to-end client tests in total, on top of the 300 request specs!
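The test count is a simple matrix: every example runs once per mode, and there are ten modes (one request-spec mode plus one per client). This illustrative snippet spells out the arithmetic using the numbers from the paragraph above; the language labels are just names.

```ruby
# Illustrative arithmetic for the test matrix described above.
CLIENT_LANGUAGES = %w[csharp elixir go java javascript php python ruby typescript].freeze
MODES = [:request_spec] + CLIENT_LANGUAGES.map { |lang| :"client_#{lang}" }

examples = 300
client_runs = examples * CLIENT_LANGUAGES.size # 300 * 9 = 2,700 client tests
total_runs = examples * MODES.size             # 300 * 10 = 3,000 runs overall
```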

Why Test Generated Clients?

You might ask: if the SDKs are generated directly from the OpenAPI schema, why bother testing them at all?

The reality is that code generation doesn’t guarantee correctness. Different languages handle parameter types in subtly different ways. Some OpenAPI Generator templates have bugs. An updated dependency might crash under certain conditions. And sometimes the schema itself doesn’t perfectly capture the actual API behavior. By running the exact same test suite through all of our generated API clients, we’ve uncovered and fixed dozens of issues, including bugs in OpenAPI Generator and flaws in our own API design. Now we don’t have to wait for a customer to hit an edge case and file a bug report. We catch those problems ourselves before we release the client library.

A few concrete examples:

- We had defined a `limit` parameter for pagination as a `number`. In some languages, that was interpreted as a float or decimal, rather than an integer. A generated client produced the wrong type and our tests immediately flagged it.

- File upload endpoints to create or update templates from PDFs were broken in several clients. I ended up needing to patch a bug in a Ruby HTTP library (typhoeus/ethon). Without these tests, our customers would have hit these failures first.
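The `limit` bug is the kind of mismatch that a schema-level check can also catch before any client is generated. The sketch below is a hypothetical lint (the list of count-like names `limit`, `page`, `per_page`, `offset` is an assumption) that walks a parsed OpenAPI document and reports such parameters when they are declared as `number`. In an RSwag definition like the one above, the fix is to declare them with `type: :integer`.

```ruby
# Hypothetical lint: parameter names that should always be integers.
COUNT_LIKE_PARAMS = /\A(limit|page|per_page|offset)\z/

# Walks a parsed OpenAPI document (a Hash) and returns "VERB path name"
# strings for count-like parameters declared with type "number".
# Handles OpenAPI 3 ("schema" => { "type" => ... }) and Swagger 2 ("type").
def number_typed_count_params(openapi)
  openapi.fetch("paths", {}).flat_map do |path, path_item|
    path_item.flat_map do |verb, operation|
      # Path items can also hold non-operation keys, such as a shared
      # "parameters" array; skip anything that isn't an operation Hash.
      next [] unless operation.is_a?(Hash)

      Array(operation["parameters"]).filter_map do |param|
        type = param.dig("schema", "type") || param["type"]
        name = param["name"].to_s
        "#{verb.upcase} #{path} #{name}" if type == "number" && name.match?(COUNT_LIKE_PARAMS)
      end
    end
  end
end

# Usage with a hypothetical schema fragment (the paths are made up).
schema = {
  "paths" => {
    "/widgets" => {
      "get" => { "parameters" => [{ "name" => "limit", "in" => "query", "schema" => { "type" => "number" } }] }
    },
    "/gadgets" => {
      "get" => { "parameters" => [{ "name" => "limit", "in" => "query", "schema" => { "type" => "integer" } }] }
    }
  }
}
problems = number_typed_count_params(schema)
```

A check like this complements the end-to-end client tests rather than replacing them: it only finds the mistakes someone thought to encode as a rule.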

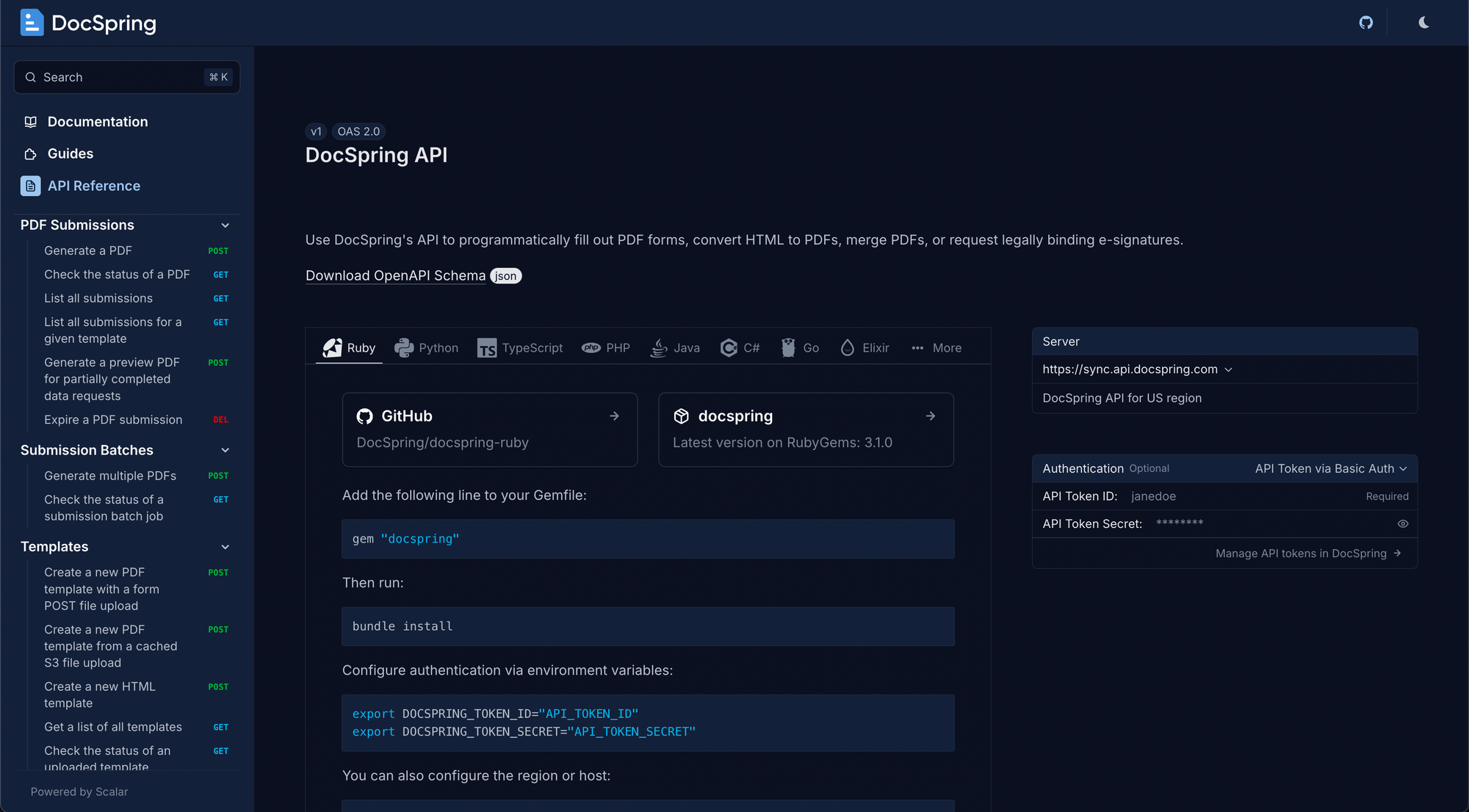

New Documentation Site

This work coincided with another big milestone: we finally migrated from our old GitBook docs to a new documentation site built with Starlight. The new site includes an API reference page powered by a customized version of Scalar.

Acknowledgements

Our end-to-end tested API clients and our new documentation wouldn’t exist without the incredible teams behind these open source projects:

We’ve been proud sponsors of the OpenAPI Generator project for many years. We wouldn’t have been able to build this without it.

Open Source?

The system we’ve built isn't open source yet. It will take some work to extract it from our codebase and publish a framework that other teams can use. Let us know if this is something you’d be interested in using!

Conclusion

This project has been a long time coming. I kept circling back, building pieces, hitting walls, and slowly making progress over the years. AI coding tools finally gave me the extra momentum I needed to tie it all together.

The result is one of the most satisfying pieces of developer infrastructure I’ve ever built. We finally have API tests that don’t just verify correctness, but also generate our OpenAPI schema, our client libraries, the end-to-end tests for our client libraries, and all the code examples in our documentation.

You can view the code examples in our new API reference docs: https://docspring.com/docs/api